The latest release of XenDesktop is now available as a Service Template for System Center Virtual Machine Manager.

I am assuming that my blog readers are already familiar with the concept of Service Templates, introduced with SCVMM 2012.

In a “Service”, the applications and OS are separate entities that are layered on each other and deployed as a composed entity with references and dependencies intact. The Template is the representation of these dependencies and relationships. The Template is ‘deployed’ into a ‘Service’; the Service is the running machines.

For most of the past year we have been focused on simplifying the deployment of the XenDesktop infrastructure. After all, there are enough decisions to make without having to spend one or two days installing operating systems, applications, and configuring them. This is where the XenDesktop VMM Service Template comes in.

The whole idea is to take the monotonous tasks of deploying VMs, installing XenDesktop, and configuring those infrastructure machines, and reduce them to a few questions and some time, freeing you up to do more important things. At the end you have a distributed installation of XenDesktop (the license server, Director, StoreFront, and Controller), all connected and ready to deliver applications or desktops.

Why not give it a go?

If you just want to see what this is all about, take a look here: http://www.citrix.com/tv/#videos/9611

If you head over to the XenDesktop download page, you will find a “Betas and TechPreviews” section. In there you can download the XenDesktop Service Template zip package. (The Service Template is the TechPreview, not the version of XenDesktop.)

By the way, there are four templates. One template installs a scaled-out XenDesktop, another an evaluation installation of XenDesktop. You will also find Provisioning Server templates, which likewise support either scaling out or an evaluation installation.

After downloading the package, unzip it to a convenient location, then open the SCVMM Console, select the Library view, and click the Import button in the ribbon.

(You can always stop here and read the administration guide; it is short and has all the pretty screenshots that this post is missing.)

Browse to the XML file in the package you just unzipped.

Then map your generalized Server 2012 (or Server 2012 R2) VHD / VHDX to the package by selecting the pencil icon. (A red X appears when it is mapped. Don’t ask me why a red X.)

If you want SCVMM integration enabled, place the SCVMM installation ISO in your VMM Library and select its pencil icon to create the mapping, just as you did for the generalized virtual disk.

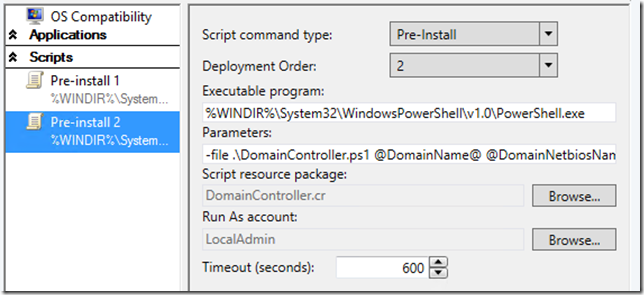

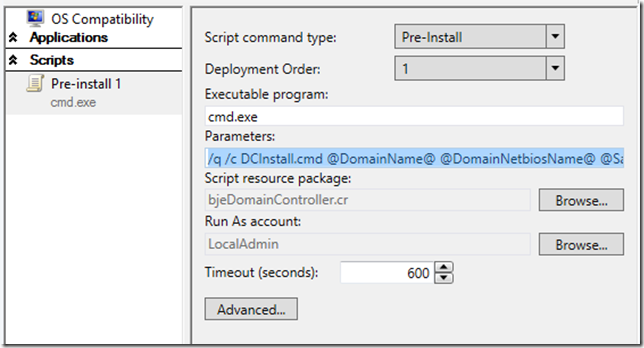

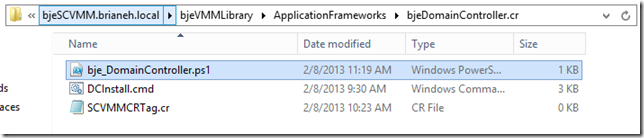

The Custom Resource should be uploaded from the package and contains the Citrix parts.

There is a really short import video here if you don’t want to read all of that.

After you import, you can deploy the XenDesktop infrastructure by right-clicking the template and selecting Configure Deployment. Answer a few pertinent questions, select Refresh Preview so that SCVMM places the machines, and select Deploy Service. The name you give your Service will also become the name of your XenDesktop Site.

Now, go to lunch. When you return, connect to the console of the Controller VM, open Studio, and begin publishing desktops.

You can watch a shortened version of the deployment process.

The requirements are no different from those of any version of XenDesktop. You need a domain to join (with DNS), a SQL Server, a VM Network from which the machines can reach those resources, a RunAs account for the (first) XenDesktop administrator, and a user account for XenDesktop to integrate with SCVMM.
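If you would like to sanity-check the network side of those requirements before you deploy, here is a minimal sketch in Python. It is not part of the Service Template package; the function names are my own, and it assumes your SQL Server listens on the default TCP port 1433 (a named instance may use a different port). Run it from a machine attached to the VM Network you plan to use.

```python
import socket


def resolves(hostname: str) -> bool:
    """Return True if the name can be resolved (DNS or local hosts file)."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def preflight(domain: str, sql_host: str, sql_port: int = 1433) -> dict:
    """Check the reachability requirements: domain DNS and the SQL Server.

    1433 is the default SQL Server port; pass sql_port if yours differs.
    """
    return {
        "domain_resolves": resolves(domain),
        "sql_host_resolves": resolves(sql_host),
        "sql_port_reachable": port_open(sql_host, sql_port),
    }
```

Calling `preflight("corp.example.com", "sql01.corp.example.com")` (both names are placeholders for your own domain and SQL Server) returns a dictionary of three True/False results. It only tells you that names resolve and the port answers; it does not validate the RunAs account or the SCVMM integration account.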

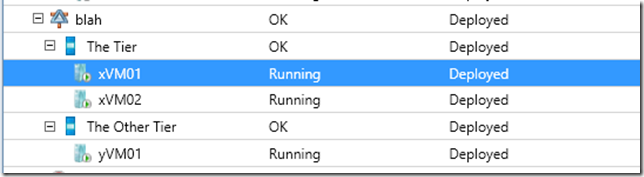

As time passes you may decide that you need additional StoreFront servers or Desktop Controller servers. To add them, select your Service in the VMs and Services view, right-click, and choose Scale Out. Select the tier and go. Additional Controller capacity is created for you and added to the Site. StoreFront requires some additional configuration so you can tailor the load balancing to your environment.

If you need to see that one, I have a video for that as well.

If you need support, you can get it in the XenDesktop forums; we will be there to help and respond to questions.

Please, give us feedback and let us know what you think.